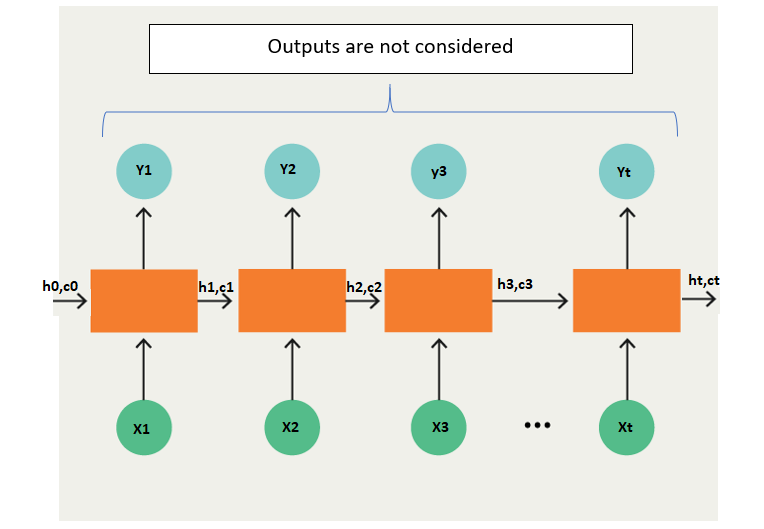

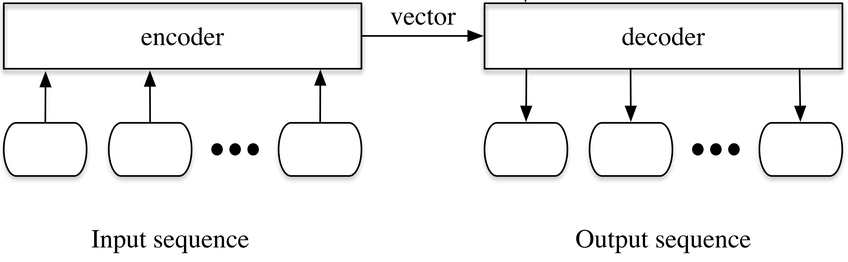

Understanding Encoder-Decoder Sequence to Sequence Model | by Simeon Kostadinov | Towards Data Science

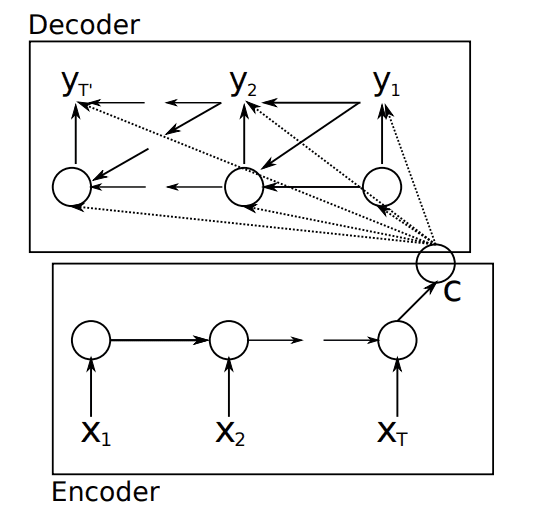

Sequence-to-Sequence Models: Attention Network using Tensorflow 2 | by Nahid Alam | Towards Data Science

10.7. Encoder-Decoder Seq2Seq for Machine Translation — Dive into Deep Learning 1.0.0-beta0 documentation

Baseline sequence-to-sequence model's architecture with attention [See...

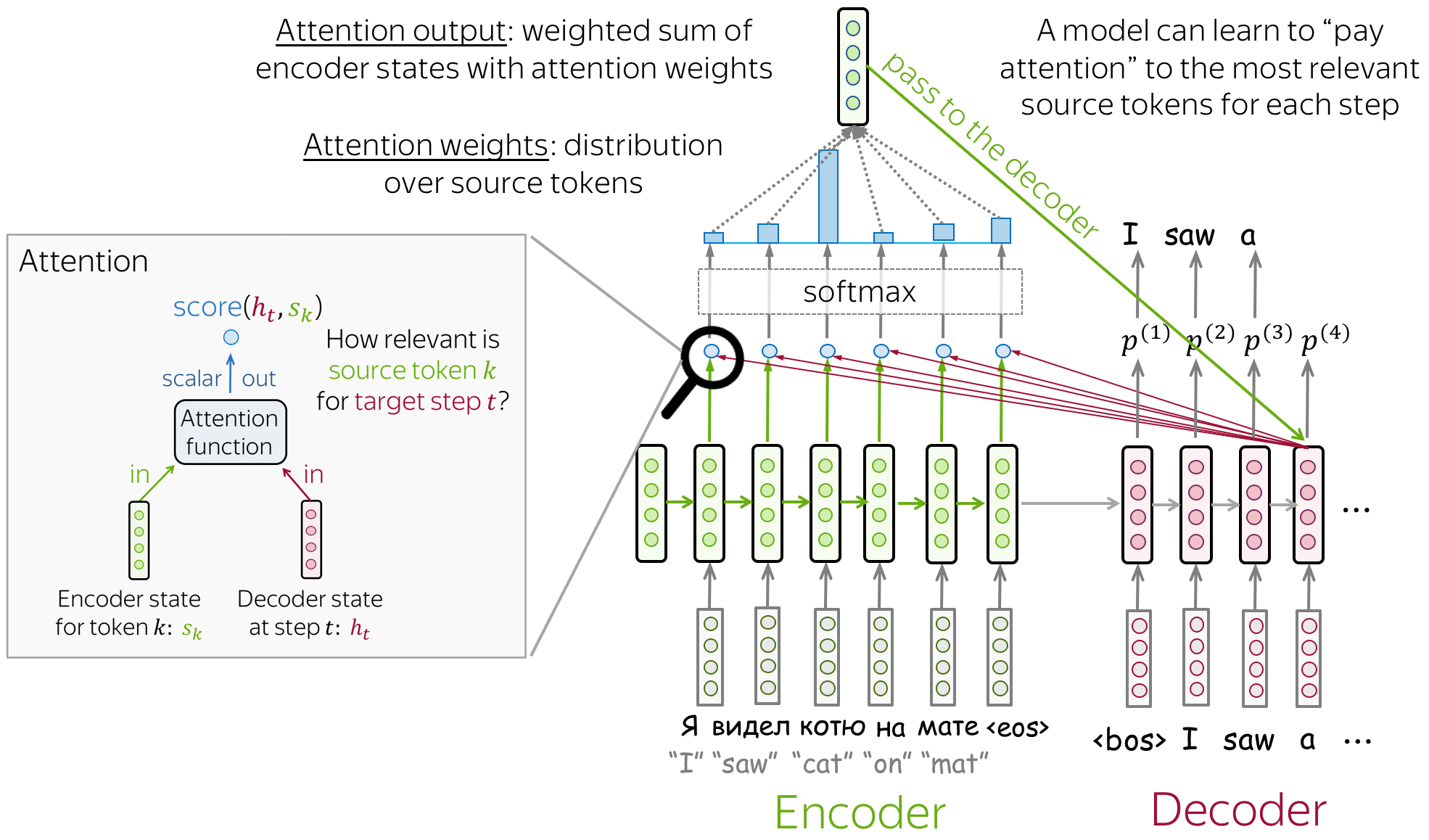

Gentle Introduction to Global Attention for Encoder-Decoder Recurrent Neural Networks - MachineLearningMastery.com

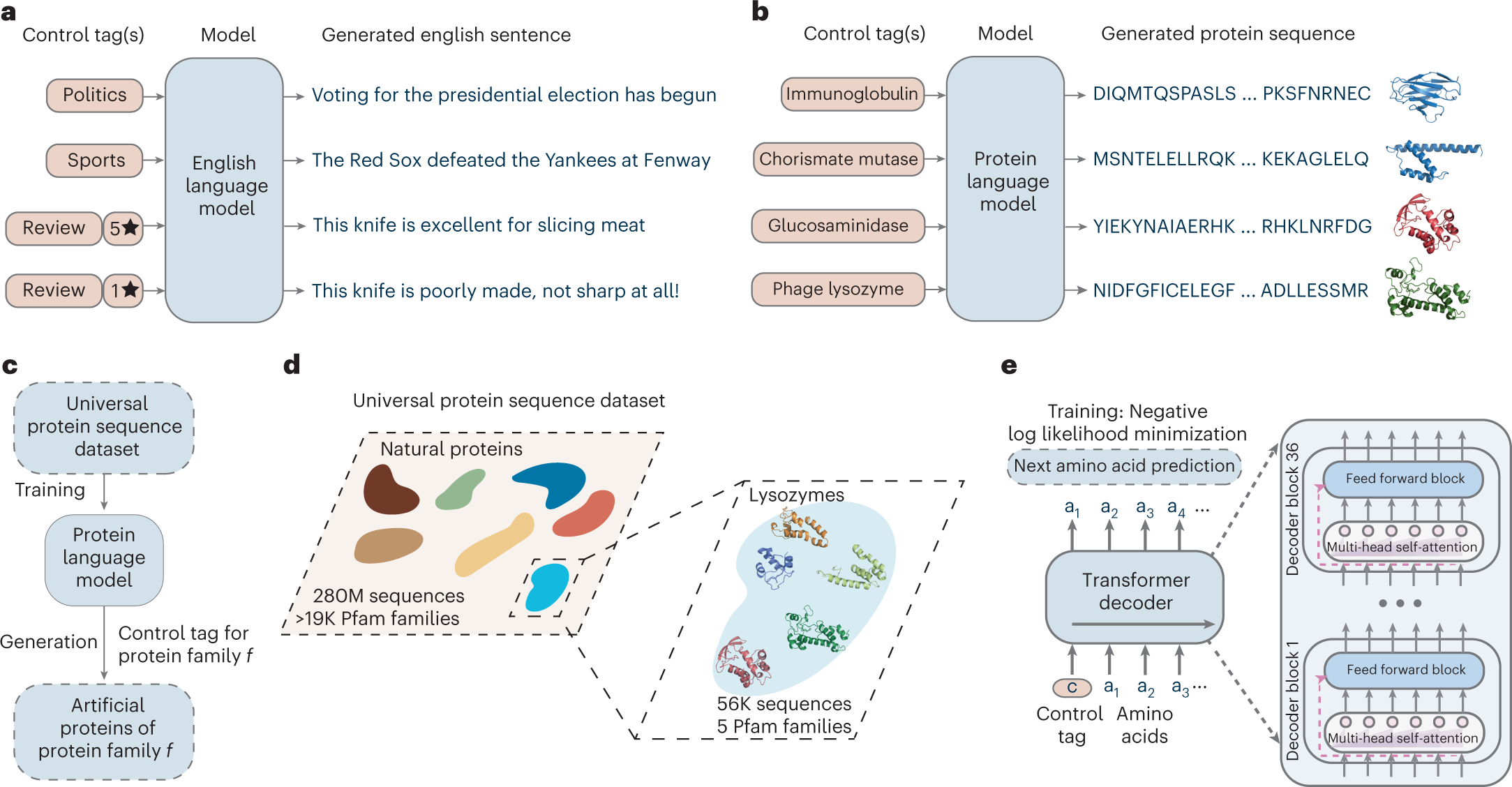

Large language models generate functional protein sequences across diverse families | Nature Biotechnology

NLP Transformers. Natural Language Processing or NLP is a… | by Meriem Ferdjouni | Analytics Vidhya | Medium

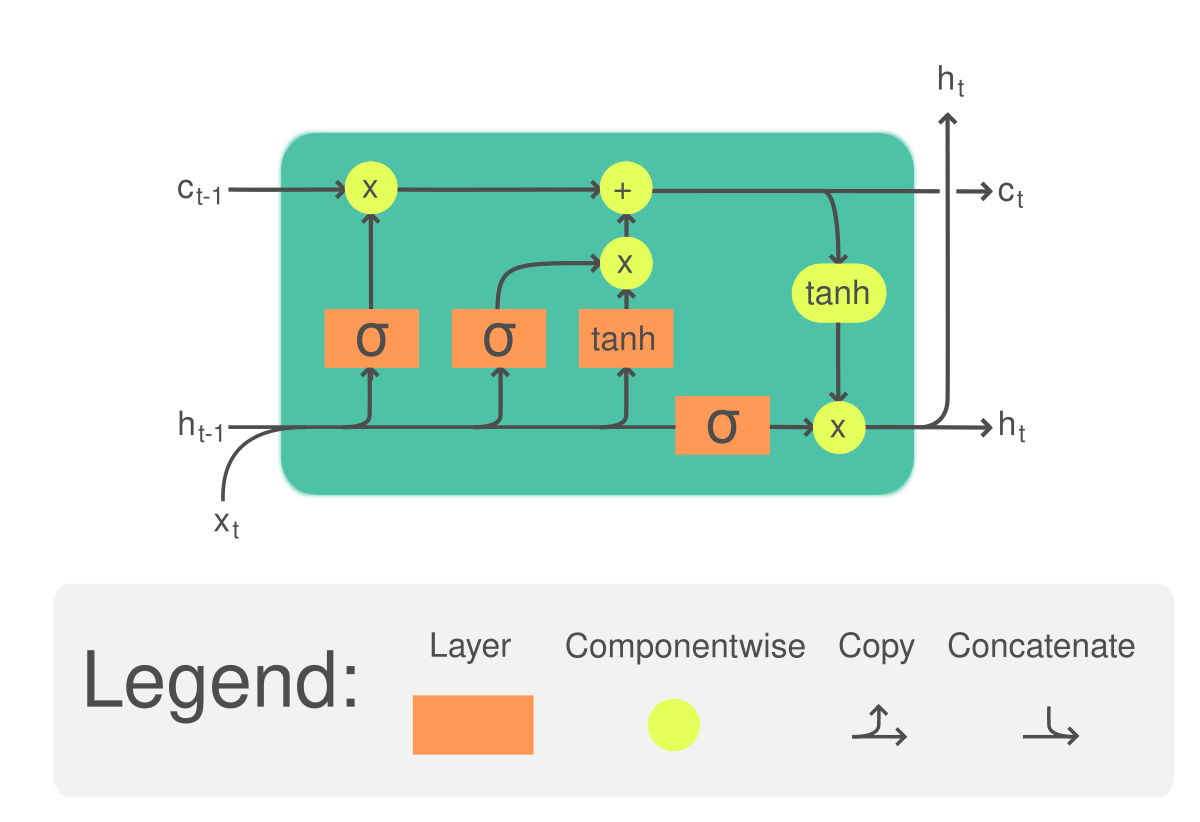

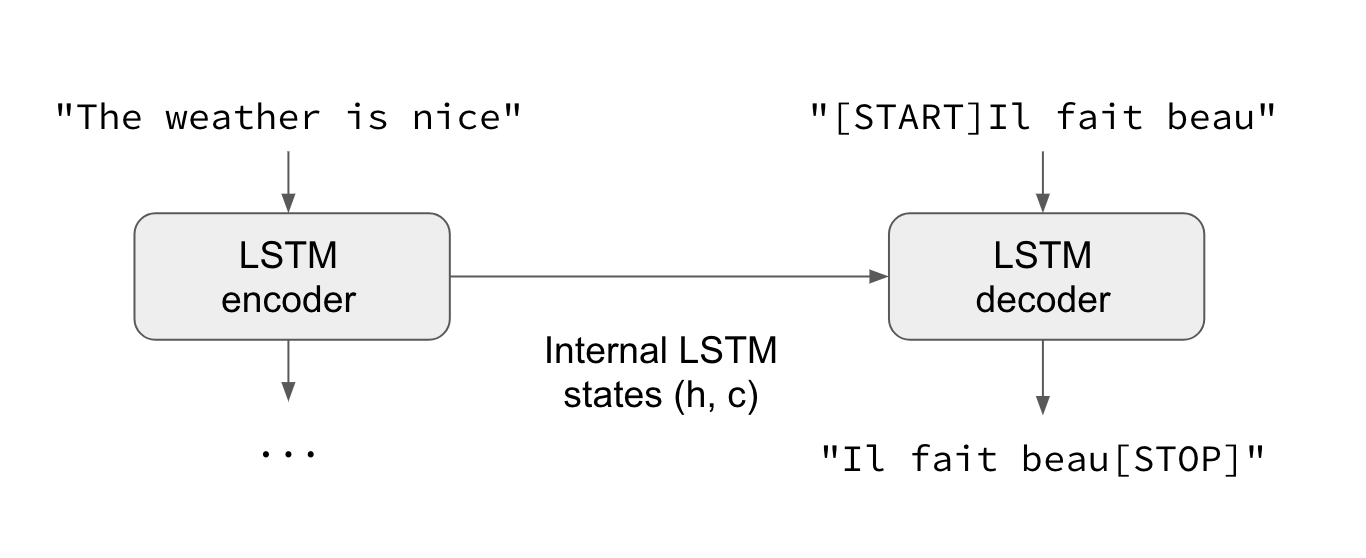

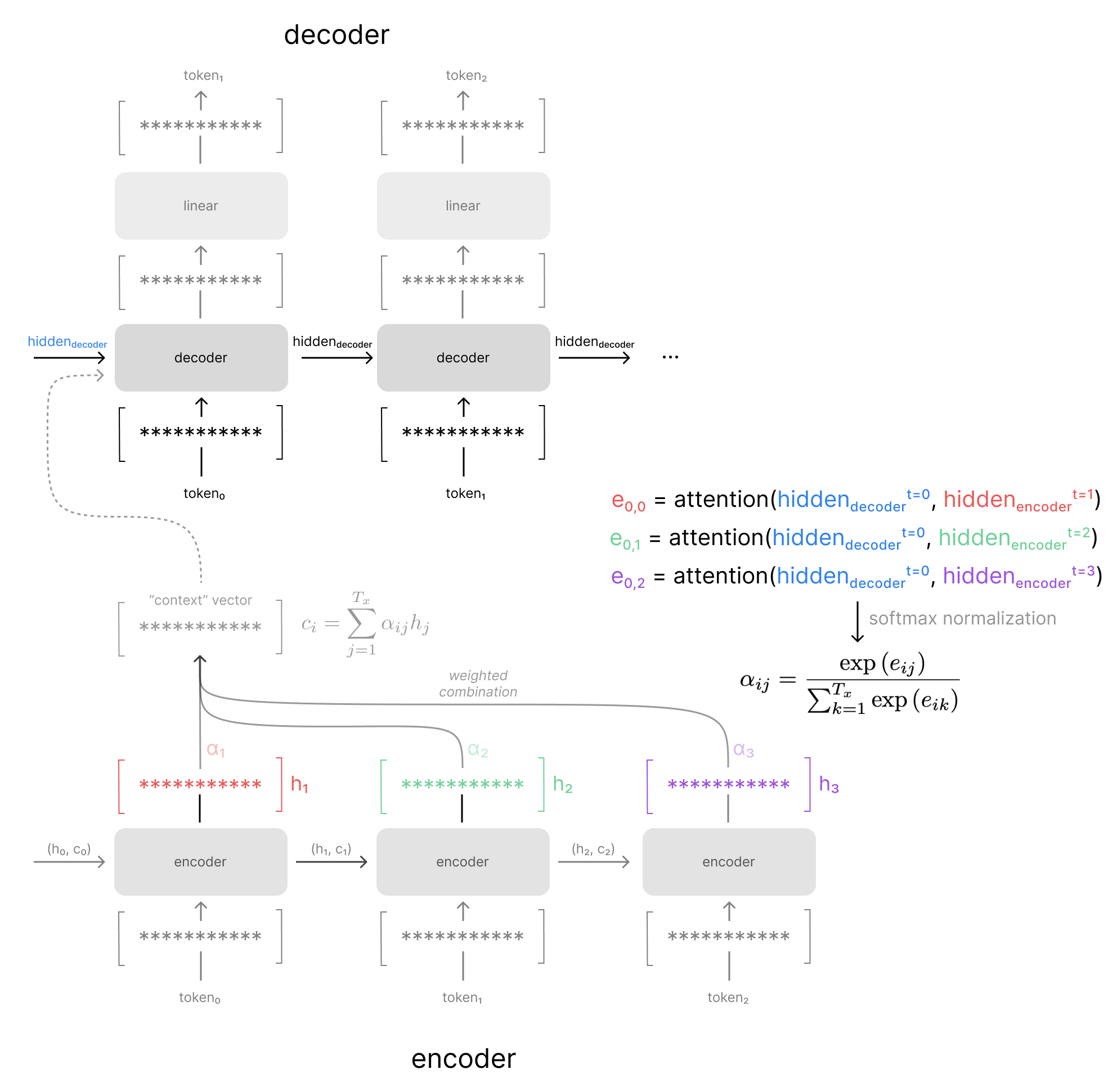

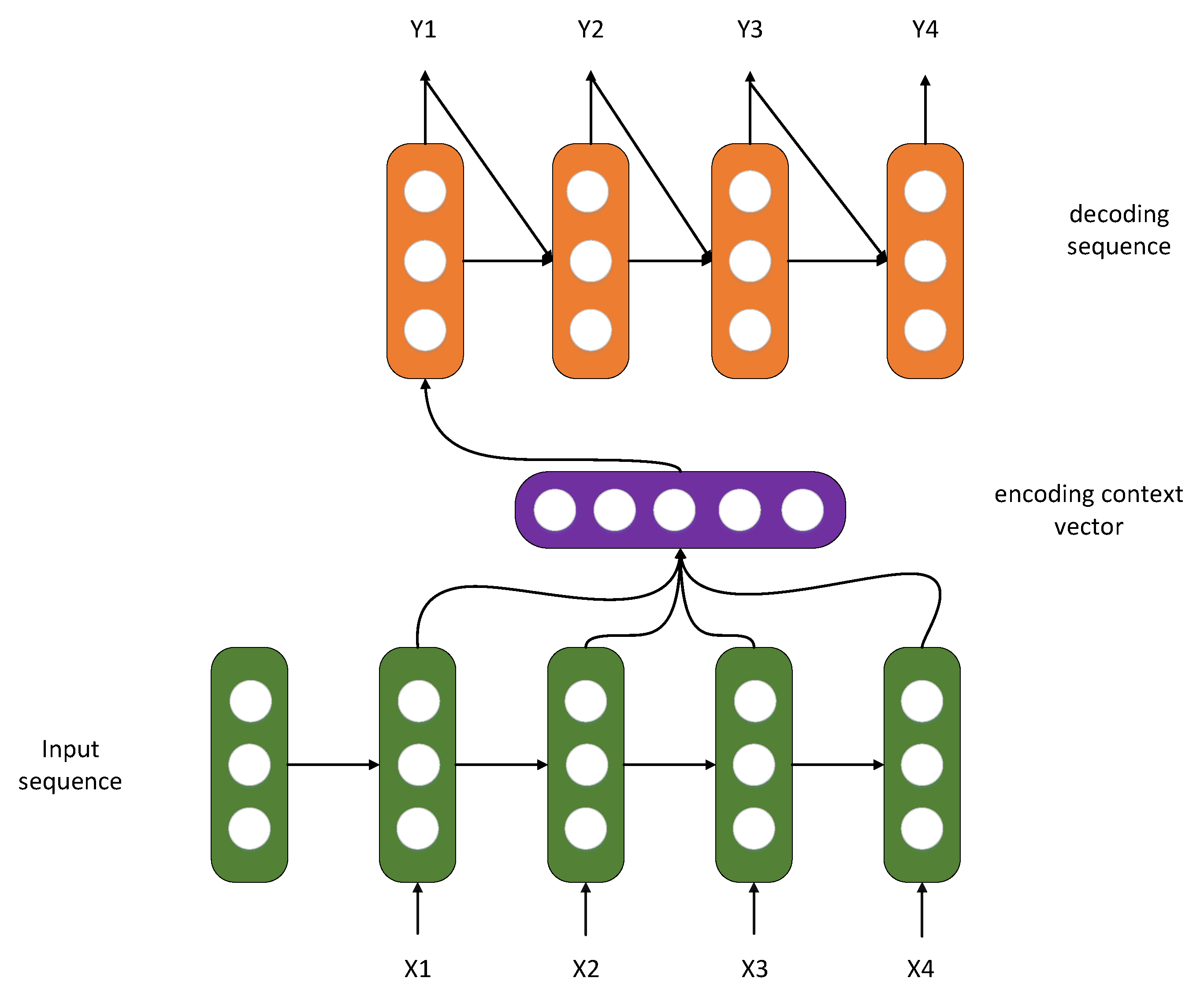

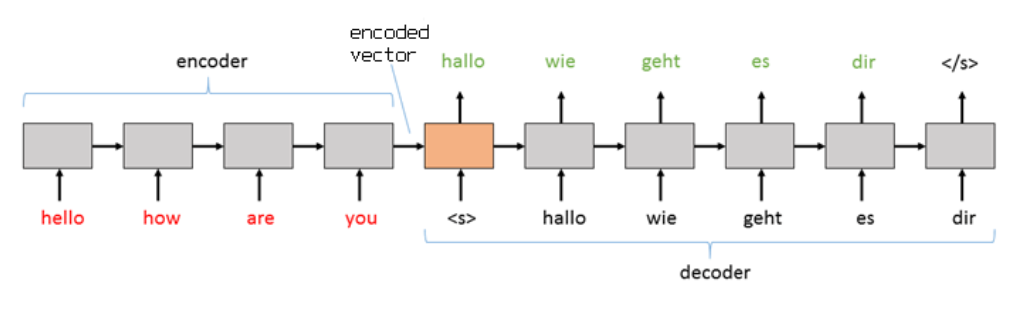

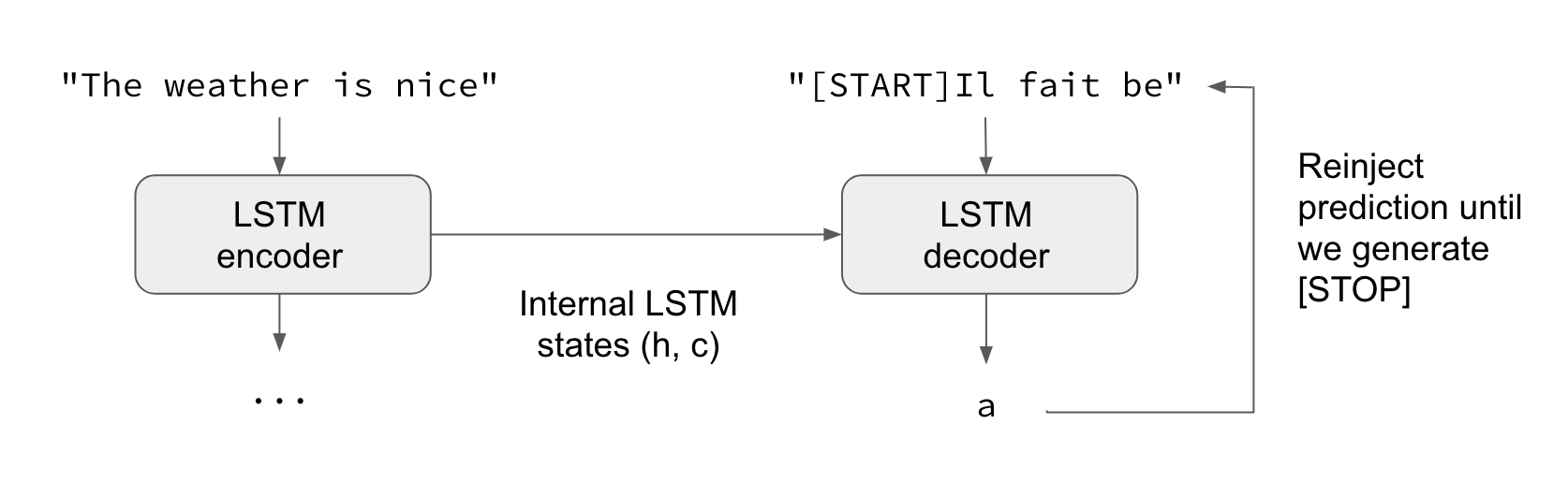

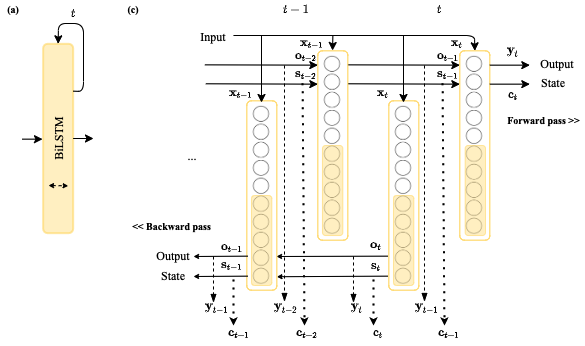

Attention — Seq2Seq Models. Sequence-to-sequence (abrv. Seq2Seq)… | by Pranay Dugar | Towards Data Science