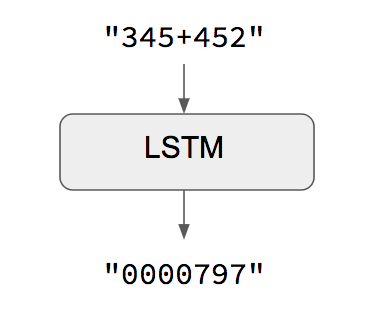

Sequence-to-sequence Autoencoder (SA) consists of two RNNs: RNN Encoder...

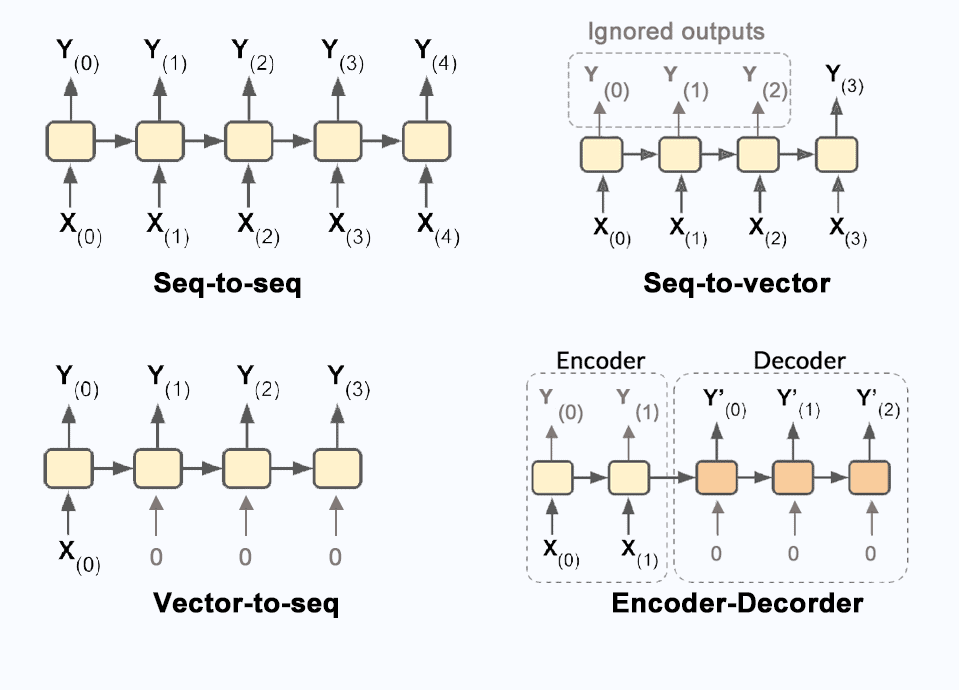

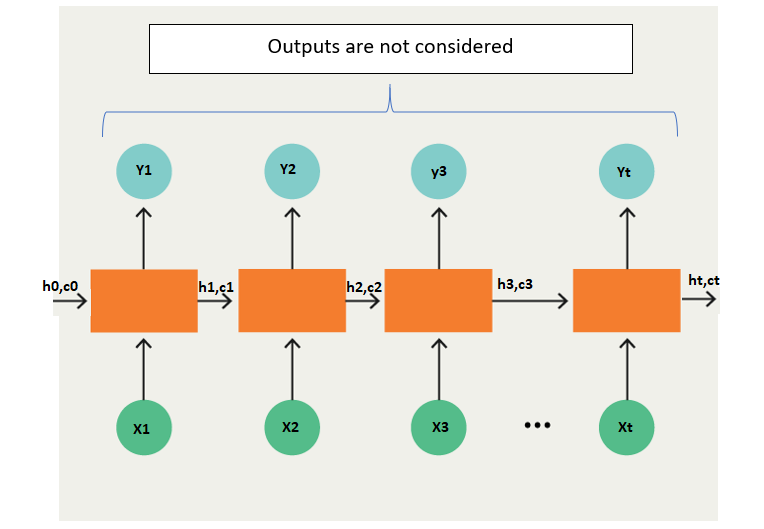

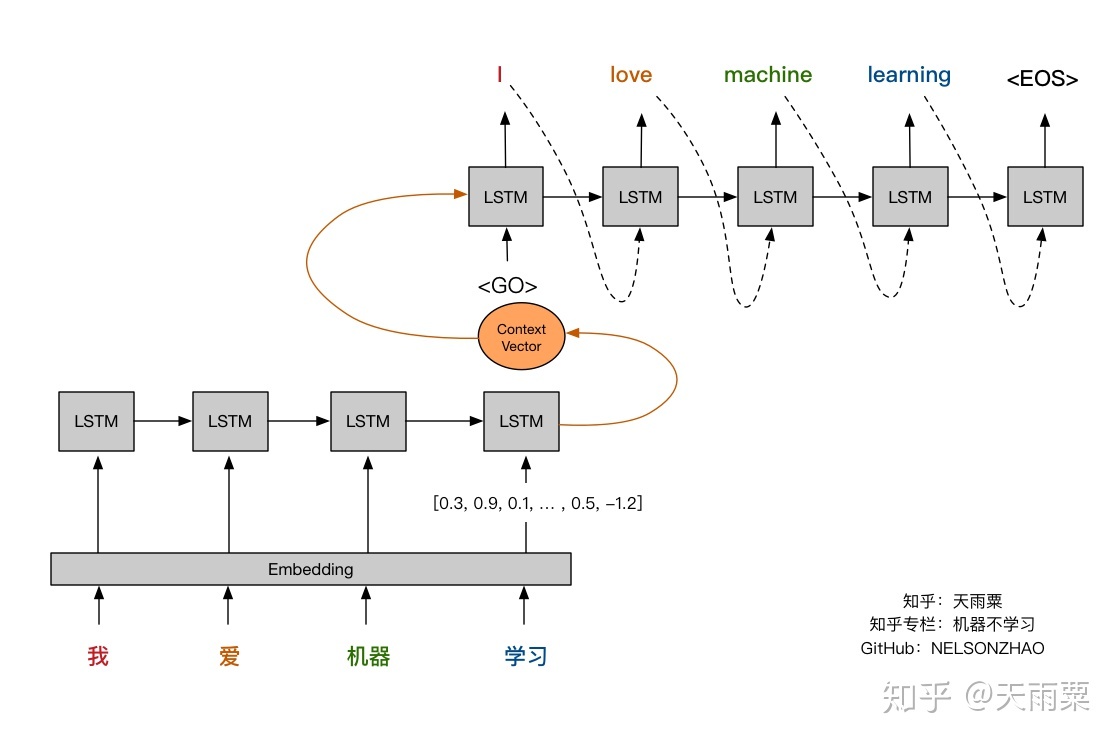

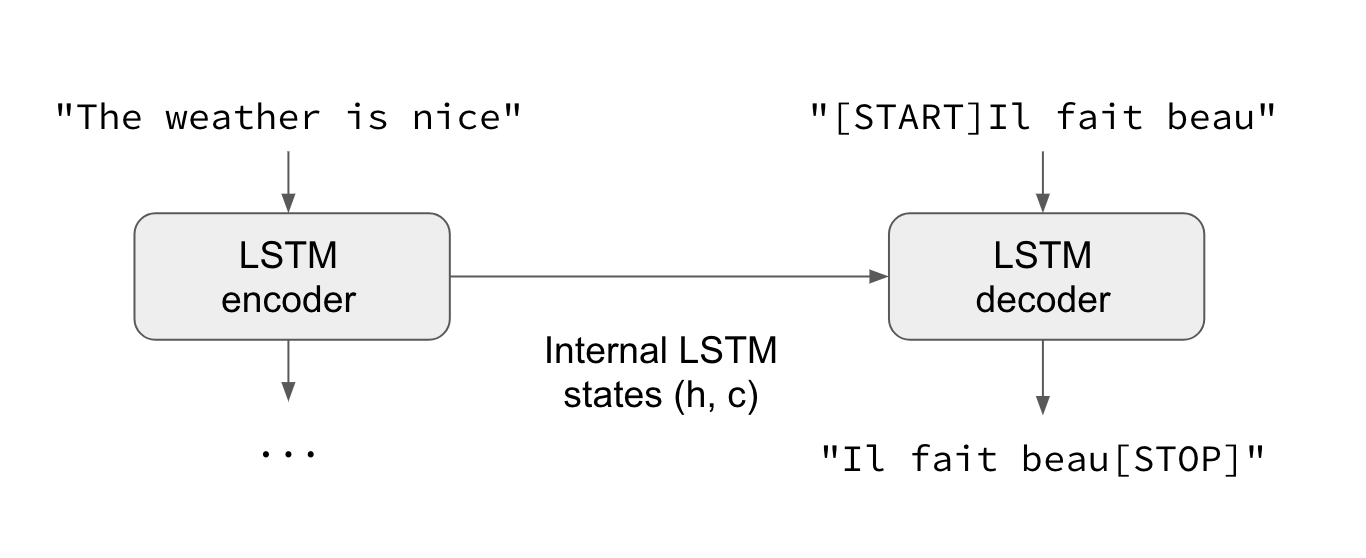

Understanding Encoder-Decoder Sequence to Sequence Model | by Simeon Kostadinov | Towards Data Science

10.7. Encoder-Decoder Seq2Seq for Machine Translation — Dive into Deep Learning 1.0.0-beta0 documentation

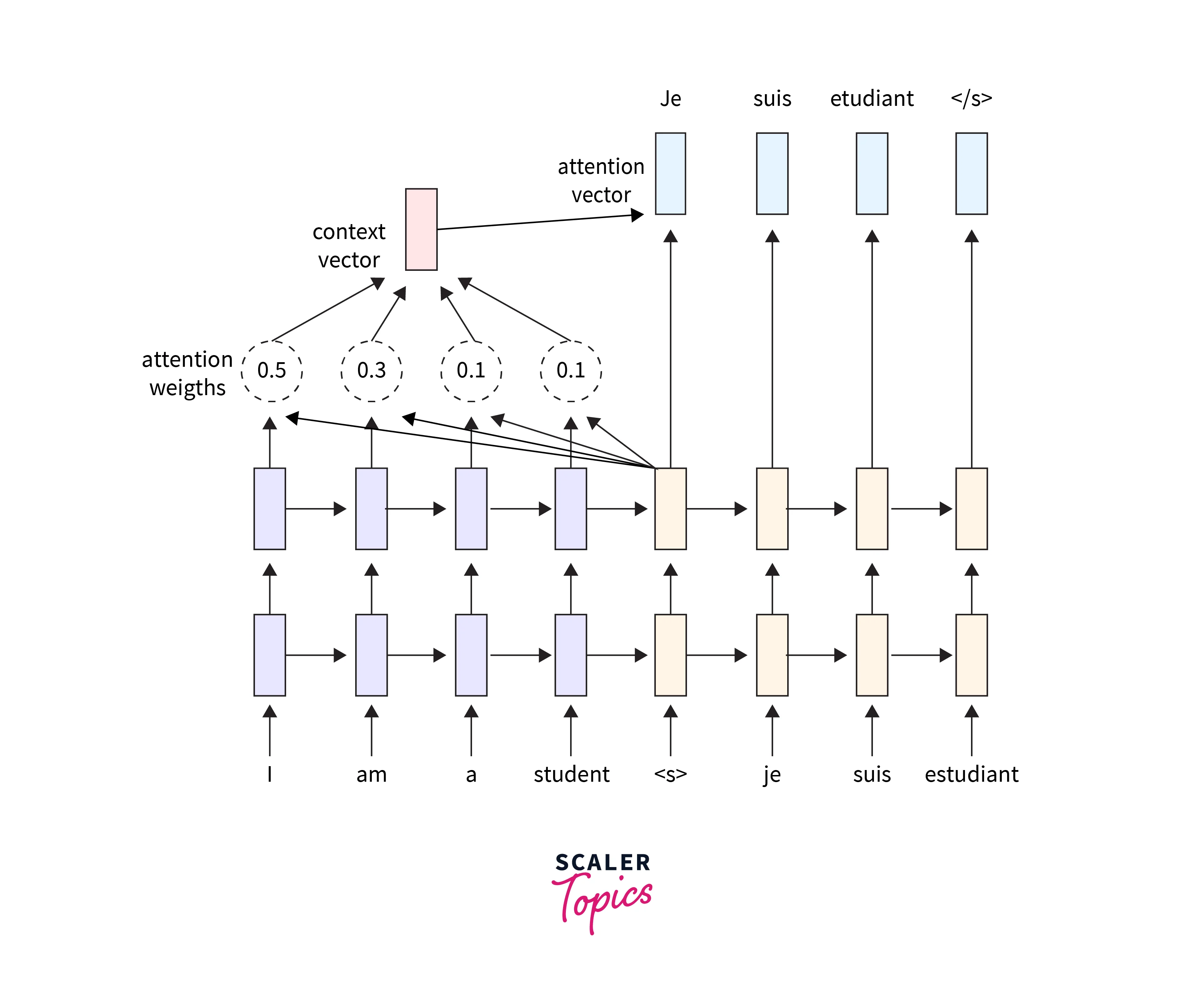

Understanding Attention mechanism and Machine Translation Using Attention-Based LSTM (Long Short Term Memory) Model - MarkTechPost

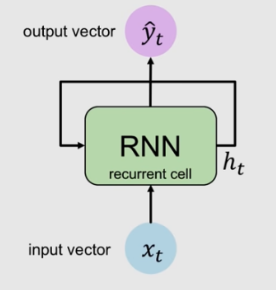

Introduction to RNNs, Sequence to Sequence Language Translation and Attention | by Omer Sakarya | Towards Data Science

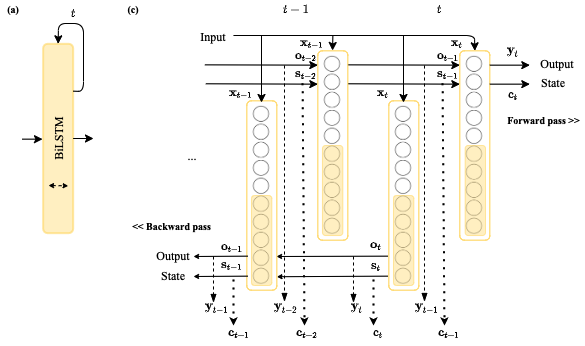

Electronics | Free Full-Text | Sequence to Point Learning Based on an Attention Neural Network for Nonintrusive Load Decomposition

EvoLSTM is a sequence-to-sequence bidirectional LSTM model made of an...

Long-Deep Recurrent Neural Net (LD-RNN). The input layer (bottom) is a...

![4. Recurrent Neural Networks - Neural networks and deep learning [Book]](https://www.oreilly.com/api/v2/epubs/9781492037354/files/assets/mlst_1404.png)